Taleo Error Invalid Data Review All Error Messages Below to Correct Your Data

17 May EXTRACTING DATA FROM TALEO

Over the past few years we have seen companies focusing more and more on Human Resources / Human Capital activities. This is no surprise, considering that nowadays a large number of businesses depend more on people skills and creativity than on machinery or capital, so hiring the right people has become a critical process. As a consequence, more and more emphasis is put on having the right software to support HR/HC activities, and this in turn leads to the necessity of building a BI solution on top of those systems for a proper evaluation of processes and resources. One of the most commonly used HR systems is Taleo, an Oracle product that resides in the cloud, so there is no direct access to its underlying data. Nevertheless, most BI systems are still on-premise, so if we want to use Taleo data, we need to extract it from the cloud first.

1. Taleo data extraction methods

As mentioned before, there is no direct access to Taleo data; nevertheless, there are several ways to extract it, and once extracted we will be able to use it in the BI solution:

- Querying the Taleo API
- Using Cloud Connector
- Using Taleo Connect Client

The API is very robust, but it is the most complex of the methods, since it requires a separate application to be written. Usually, depending on the configuration of the BI system, either Oracle Cloud Connector, Taleo Connect Client or a combination of both is used.

|

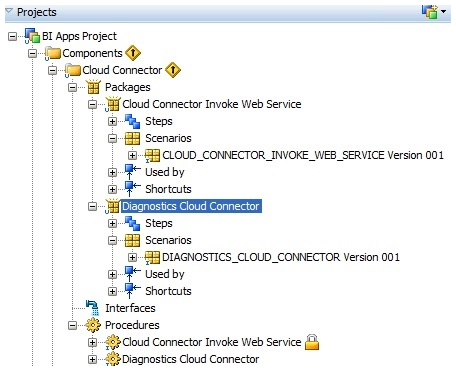

| Figure 1: Cloud connector in ODI objects tree |

Oracle Cloud Connector is a component of OBI Apps, and essentially it's Java code that replicates Taleo entities / tables. It's also easy to use: simply by creating any Load Plan in BIACM using Taleo as the source system, a series of calls to Cloud Connector are generated that effectively replicate Taleo tables to a local schema. Although it works well, it has two significant disadvantages:

- It's only available as a component of BI Apps
- It doesn't extract Taleo UDFs (User Defined Fields)

So even if we have BI Apps installed and we use Cloud Connector, there will be some columns (UDFs) that will not get extracted. This is why the use of Taleo Connect Client is often a must.

2. Taleo Connect Client

Taleo Connect Client is a tool used to export or import data from / to Taleo. In this article we're going to focus on extraction. It can extract any field, including UDFs, so it can be used in combination with BI Apps Cloud Connector or, if that's not available, as the sole extraction tool. There are versions for both Windows and Linux operating systems. Let's look at the Windows version first.

Part 1 – Installation & running:

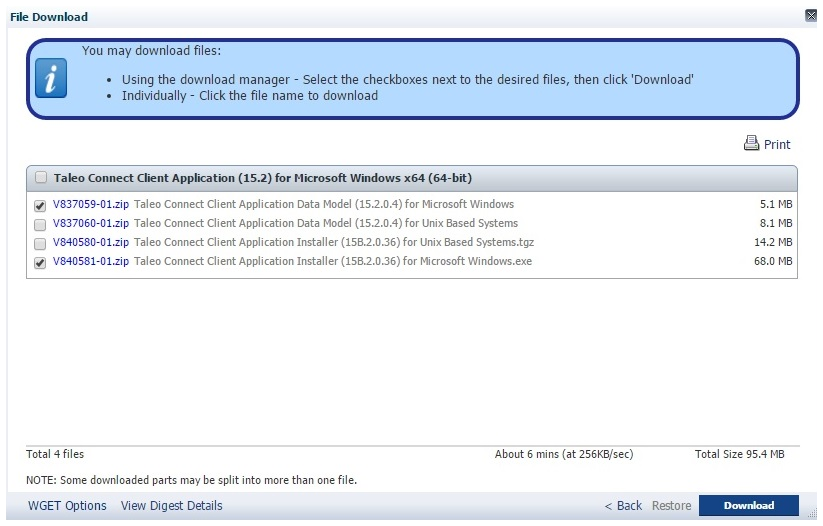

Taleo Connect Client can be downloaded from the Oracle e-delivery website; just type Taleo Connect Client into the search box and you will see it on the list. Choose the necessary version, select Application Installer and Application Data Model (required!), remembering that it must match the version of the Taleo application you will be working with; then just download and install. Important: the Data Model must be installed before the application is installed.

Figure 2: Downloading TCC for Windows

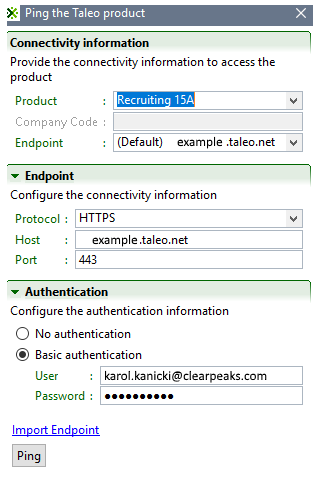

After TCC is installed, we run it, providing the necessary credentials in the initial screen:

Figure 3: Taleo Connect Client welcome screen

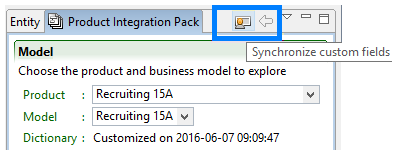

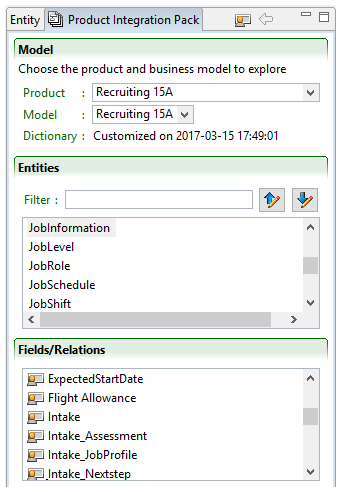

Then, after clicking on 'ping', we connect to Taleo. The window that we see is initially empty, but we can create or execute new extracts from it. But before going on to this step, let's find out how to see the UDFs: in the right panel, go to the 'Product integration pack' tab and select the right product and model. Then, in the Entities tab, we can see a list of entities / tables, and in fields / relations, we can see columns and relations with other entities / tables (through foreign keys). After the first run, you will probably have some UDFs that are not on the list of fields / relations available. Why is this? Because what we initially see in the field list are only Taleo out-of-the-box fields, installed with the Data Model installer. But don't worry, this can easily be fixed: use the 'Synchronize custom fields' icon (highlighted on the screenshot). After clicking on it you will be taken to a log-on screen where you'll have to provide log-on credentials again, and after clicking on the 'Synchronize' button, the UDFs will be retrieved.

Figure 4: Synchronizing out-of-the-box model with User Defined Fields

Figure 5: Synchronized list of fields, including some UDFs (marked with 'person' icon)

Part 2 – Preparing the extract:

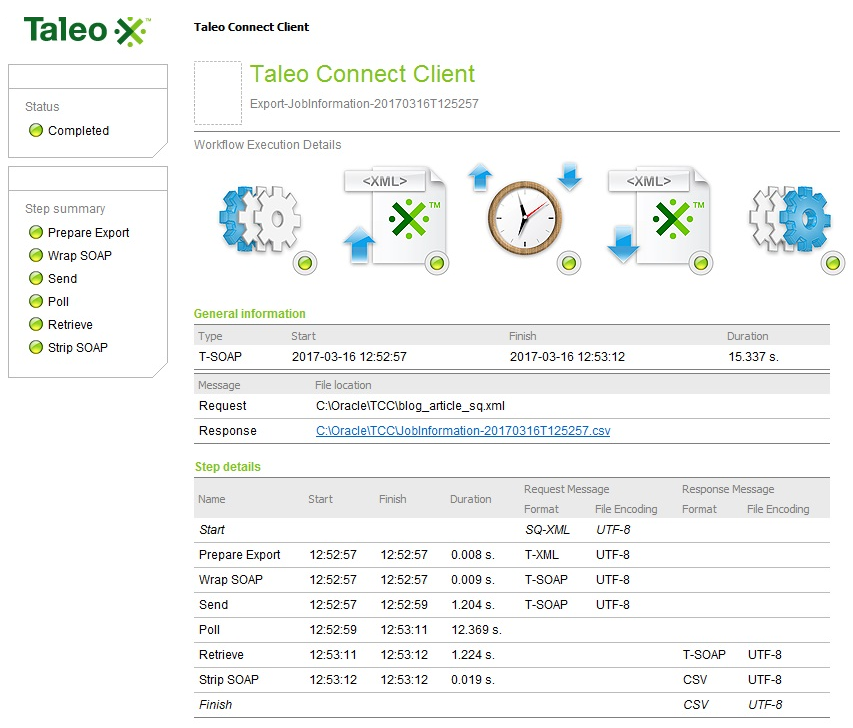

Once we have all the required fields available, preparing the extract is pretty straightforward. Go to File->New->New Export Wizard, then choose the desired Entity, and click on Finish. Now, in the General window, set Export Mode to 'CSV-Entity', and in the Projections tab, select the columns that you want to extract by dragging and dropping them from the Entity->Structure window on the right. You can also add filters or sort the result set. Finally, save the export file.

The other component necessary to actually extract the data is the so-called configuration. To create it, we select File->New->New Configuration Wizard, then we point to the export file that we've created in the previous step and, in the subsequent step, our endpoint (the Taleo instance that we will extract the data from). Then, on the following screen, there are more extract parameters, like request format and encoding, extract file name format and encoding and much more. In most cases, using the default values of parameters will let us extract the data successfully, so unless it's clearly required, there is no need to change anything. So now the configuration file can be saved and the extraction process can start, just by clicking on the 'Execute the configuration' button (on the toolbar just below the main menu). If the extraction is successful, then all the indicators in the Monitoring window on the right will turn green, as in the screenshot below.

Figure 6: TCC successful extraction

By using a bat file created during the installation, you can schedule TCC jobs to be executed on a regular basis, using the Windows Task Scheduler, but it's much more common to have your OBI / BI Apps (or almost any other DBMS that your organization uses as a data warehouse) installed on a Linux / Unix server. This is why we're going to have a look at how to install and set up TCC in a Linux environment.

Part 3 – TCC in a Linux / Unix environment:

TCC setup in a Linux / Unix environment is a bit more complex. To simplify it, we will use some of the components that were already created and used when we worked with Windows TCC, and although the frontend of the application is totally different (to be precise, there is no frontend at all in the Linux version as it's strictly command-line), the way the data is extracted from Taleo is exactly the same (using extracts designed as XML files and Taleo APIs). So, after downloading the application installer and data model from edelivery.oracle.com, we install both components. Installation is really just extracting the files, first from zip to tgz, and then from tgz to uncompressed content. But this time, unlike in Windows, we recommend installing (extracting) the application first, and then extracting the data model files to an application subfolder named 'featurepacks' (this must be created, it doesn't exist by default). It's also necessary to create a subfolder 'system' in the application directory. Once this is done, you can move some components of your Windows TCC instance to the Linux one (of course, if you have no Windows machine available, you can create any of these components manually):

- Copy the file default.configuration_brd.xml from the Windows TCC/system folder to the Linux TCC/system folder
- Copy the extract XML and configuration XML files, from wherever you had them created, to the main Linux TCC directory
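The folder preparation described above can be sketched as a small shell function. This is only an illustration under assumptions: the function name prepare_tcc_dirs and the example path are ours and not part of TCC, and the archive extraction itself is left out because the archive names depend on the version you downloaded.

```shell
#!/bin/sh
# Hypothetical helper (not part of TCC): create the two subfolders that the
# Linux installation needs but does not ship with.
#   $1 - path of the extracted TCC application directory
prepare_tcc_dirs() {
    tcc_home=$1
    # 'featurepacks' receives the extracted data model files,
    # 'system' receives default.configuration_brd.xml
    mkdir -p "$tcc_home/featurepacks" "$tcc_home/system"
}

# Example call (the path is an assumption; adjust it to your installation):
# prepare_tcc_dirs /u01/tcc_linux/tcc-15A.2.0.20
```

Because mkdir -p is used, the function can be re-run safely on a directory that already has one or both subfolders.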

There are also some changes that need to be made in the TaleoConnectClient.sh file:

- Set the JAVA_HOME variable at the top of the file (just below the shebang line), pointing it to the path of your Java SDK installation (for some reason, in our installation, the system variable JAVA_HOME wasn't captured correctly by the script)
- In the line below #Execute the client, after the TCC_PARAMETERS variable, add:
    - the parameters of the proxy server, if one is to be used:

            -Dhttp.proxyHost=ipNumber -Dhttp.proxyPort=portNumber

    - the path of the data model files (the 'featurepacks' folder):

            -Dcom.taleo.integration.client.productpacks.dir=/u01/oracle/tcc-15A.2.0.20/featurepacks

So, in the end, the TaleoConnectClient.sh file in our environment has the following content (IP addresses were 'masked'):

    #!/bin/sh

    JAVA_HOME=/u01/middleware/Oracle_BI1/jdk

    # Make sure that the JAVA_HOME variable is defined
    if [ ! "${JAVA_HOME}" ]
    then
        echo +-----------------------------------------+
        echo "+ The JAVA_HOME variable is not defined. +"
        echo +-----------------------------------------+
        exit 1
    fi

    # Make sure the IC_HOME variable is defined
    if [ ! "${IC_HOME}" ]
    then
        IC_HOME=.
    fi

    # Check if the IC_HOME points to a valid taleo Connect Client folder
    if [ -e "${IC_HOME}/lib/taleo-integrationclient.jar" ]
    then
        # Define the class path for the client execution
        IC_CLASSPATH="${IC_HOME}/lib/taleo-integrationclient.jar":"${IC_HOME}/log"

        # Execute the client
        ${JAVA_HOME}/bin/java ${JAVA_OPTS} -Xmx256m ${TCC_PARAMETERS} \
            -Dhttp.proxyHost=x.x.x.x -Dhttp.proxyPort=8080 \
            -Dcom.taleo.integration.client.productpacks.dir=/u01/tcc_linux/tcc-15A.2.0.20/featurepacks \
            -Dcom.taleo.integration.client.install.dir="${IC_HOME}" \
            -Djava.endorsed.dirs="${IC_HOME}/lib/endorsed" \
            -Djavax.xml.parsers.SAXParserFactory=org.apache.xerces.jaxp.SAXParserFactoryImpl \
            -Djavax.xml.transform.TransformerFactory=net.sf.saxon.TransformerFactoryImpl \
            -Dorg.apache.commons.logging.Log=org.apache.commons.logging.impl.Log4JLogger \
            -Djavax.xml.xpath.XPathFactory:http://java.sun.com/jaxp/xpath/dom=net.sf.saxon.xpath.XPathFactoryImpl \
            -classpath ${IC_CLASSPATH} com.taleo.integration.client.Client ${@}
    else
        echo +-----------------------------------------------------------------------------------------------
        echo "+ The IC_HOME variable is defined as (${IC_HOME}) but does not contain the Taleo Connect Client"
        echo "+ The library ${IC_HOME}/lib/taleo-integrationclient.jar cannot be found. "
        echo +-----------------------------------------------------------------------------------------------
        exit 2
    fi

Once this is set up, we can also apply the necessary changes to the extract and configuration files, although there is no need to change anything in the extract definition (file blog_article_sq.xml). Let's have a quick look at the content of this file:

    <?xml version="1.0" encoding="UTF-8"?>
    <quer:query productCode="RC1501" model="http://www.taleo.com/ws/tee800/2009/01"
                projectedClass="JobInformation" locale="en" mode="CSV-ENTITY"
                largegraph="true" preventDuplicates="false"
                xmlns:quer="http://www.taleo.com/ws/integration/query">
      <quer:subQueries/>
      <quer:projections>
        <quer:projection><quer:field path="BillRateMedian"/></quer:projection>
        <quer:projection><quer:field path="JobGrade"/></quer:projection>
        <quer:projection><quer:field path="NumberToHire"/></quer:projection>
        <quer:projection><quer:field path="JobInformationGroup,Description"/></quer:projection>
      </quer:projections>
      <quer:projectionFilterings/>
      <quer:filterings/>
      <quer:sortings/>
      <quer:sortingFilterings/>
      <quer:groupings/>
      <quer:joinings/>
    </quer:query>

Just by looking at the file we can figure out how to add more columns manually: we just need to add more quer:projection tags inside quer:projections, like

    <quer:projection><quer:field path="DesiredFieldPath"/></quer:projection>
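When several columns have to be added, typing the tags by hand gets error-prone. The following sketch generates them from a list of field paths; make_projections is a hypothetical helper name, and it assumes the field paths contain no characters that need XML escaping.

```shell
#!/bin/sh
# Hypothetical helper: print one <quer:projection> element per field path
# passed as an argument, ready to paste inside <quer:projections>.
make_projections() {
    for field_path in "$@"; do
        printf '<quer:projection><quer:field path="%s"/></quer:projection>\n' "$field_path"
    done
}

# Example call, using two field paths from the extract definition above:
# make_projections JobGrade NumberToHire
```

The output is plain text, so it still has to be pasted into the extract definition by hand or with a tool of your choice.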

With regard to the configuration file, we need to make some small changes: in the tags cli:SpecificFile and cli:Folder, absolute Windows paths are used. Once we move the files to Linux, we need to replace them with Linux filesystem paths, absolute or relative. Once the files are ready, the only remaining task is to run the extract, which is done by running:

./TaleoConnectClient.sh blog_article_cfg.xml
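Before running the command above, it can be worth confirming that no Windows paths are left in the configuration file. A minimal sketch, assuming that leftover paths start with a drive letter followed by a backslash (for example C:\...); find_windows_paths is a hypothetical helper name, not a TCC command.

```shell
#!/bin/sh
# Hypothetical helper: list the lines of a file that still contain a Windows
# drive-letter path such as C:\..., prefixed with their line numbers.
# Exit status is 0 if at least one such line is found and 1 if none is found.
find_windows_paths() {
    grep -nE '[A-Za-z]:\\' "$1"
}

# Example call:
# find_windows_paths blog_article_cfg.xml
```

If the function prints nothing, the file contains no drive-letter paths; UNC paths (\\server\share) would not be caught by this pattern.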

See the execution log:

    [KKanicki@BIApps tcc-15A.2.0.20]$ ./TaleoConnectClient.sh blog_article_cfg.xml
    2017-03-16 20:18:26,876 [INFO] Client - Using the following log file: /biapps/tcc_linux/tcc-15A.2.0.20/log/taleoconnectclient.log
    2017-03-16 20:18:26,876 [INFO] Client - Using the following log file: /biapps/tcc_linux/tcc-15A.2.0.20/log/taleoconnectclient.log
    2017-03-16 20:18:27,854 [INFO] Client - Taleo Connect Client invoked with configuration=blog_article_cfg.xml, request message=null, response message=null
    2017-03-16 20:18:27,854 [INFO] Client - Taleo Connect Client invoked with configuration=blog_article_cfg.xml, request message=null, response message=null
    2017-03-16 20:18:31,010 [INFO] WorkflowManager - Starting workflow execution
    2017-03-16 20:18:31,010 [INFO] WorkflowManager - Starting workflow execution
    2017-03-16 20:18:31,076 [INFO] WorkflowManager - Starting workflow step: Prepare Export
    2017-03-16 20:18:31,076 [INFO] WorkflowManager - Starting workflow step: Prepare Export
    2017-03-16 20:18:31,168 [INFO] WorkflowManager - Completed workflow step: Prepare Export
    2017-03-16 20:18:31,168 [INFO] WorkflowManager - Completed workflow step: Prepare Export
    2017-03-16 20:18:31,238 [INFO] WorkflowManager - Starting workflow step: Wrap SOAP
    2017-03-16 20:18:31,238 [INFO] WorkflowManager - Starting workflow step: Wrap SOAP
    2017-03-16 20:18:31,249 [INFO] WorkflowManager - Completed workflow step: Wrap SOAP
    2017-03-16 20:18:31,249 [INFO] WorkflowManager - Completed workflow step: Wrap SOAP
    2017-03-16 20:18:31,307 [INFO] WorkflowManager - Starting workflow step: Send
    2017-03-16 20:18:31,307 [INFO] WorkflowManager - Starting workflow step: Send
    2017-03-16 20:18:33,486 [INFO] WorkflowManager - Completed workflow step: Send
    2017-03-16 20:18:33,486 [INFO] WorkflowManager - Completed workflow step: Send
    2017-03-16 20:18:33,546 [INFO] WorkflowManager - Starting workflow step: Poll
    2017-03-16 20:18:33,546 [INFO] WorkflowManager - Starting workflow step: Poll
    2017-03-16 20:18:34,861 [INFO] Poller - Poll results: Request Message ID=Export-JobInformation-20170316T201829;Response Message Number=123952695;State=Completed;Record Count=1;Record Index=1;
    2017-03-16 20:18:34,861 [INFO] Poller - Poll results: Request Message ID=Export-JobInformation-20170316T201829;Response Message Number=123952695;State=Completed;Record Count=1;Record Index=1;
    2017-03-16 20:18:34,862 [INFO] WorkflowManager - Completed workflow step: Poll
    2017-03-16 20:18:34,862 [INFO] WorkflowManager - Completed workflow step: Poll
    2017-03-16 20:18:34,920 [INFO] WorkflowManager - Starting workflow step: Retrieve
    2017-03-16 20:18:34,920 [INFO] WorkflowManager - Starting workflow step: Retrieve
    2017-03-16 20:18:36,153 [INFO] WorkflowManager - Completed workflow step: Retrieve
    2017-03-16 20:18:36,153 [INFO] WorkflowManager - Completed workflow step: Retrieve
    2017-03-16 20:18:36,206 [INFO] WorkflowManager - Starting workflow step: Strip SOAP
    2017-03-16 20:18:36,206 [INFO] WorkflowManager - Starting workflow step: Strip SOAP
    2017-03-16 20:18:36,273 [INFO] WorkflowManager - Completed workflow step: Strip SOAP
    2017-03-16 20:18:36,273 [INFO] WorkflowManager - Completed workflow step: Strip SOAP
    2017-03-16 20:18:36,331 [INFO] WorkflowManager - Completed workflow execution
    2017-03-16 20:18:36,331 [INFO] WorkflowManager - Completed workflow execution
    2017-03-16 20:18:36,393 [INFO] Client - The workflow execution succeeded.
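For unattended runs, one simple way to verify the outcome is to look for the final success message in the captured output. The sketch below assumes the output was saved to a file and that a successful run always ends with the message shown in the log above; tcc_succeeded is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) only if the given file with captured
# TCC output contains the success message printed at the end of a good run.
#   $1 - file holding the captured output of TaleoConnectClient.sh
tcc_succeeded() {
    grep -q 'The workflow execution succeeded' "$1"
}

# Example usage (run.log is an assumed file name):
# ./TaleoConnectClient.sh blog_article_cfg.xml > run.log 2>&1
# tcc_succeeded run.log || echo "TCC extract failed, check run.log"
```

This checks the message text only; whether the script's exit code also reflects a failed extract is not covered here.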

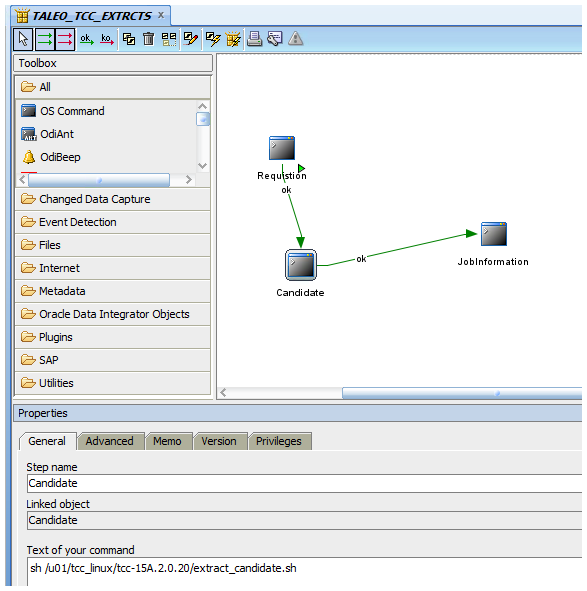

And that's it! Assuming our files were correctly prepared, the extract will be ready in the folder declared in the cli:Folder tag of the configuration file. As for scheduling, different approaches are available, the most basic being to use the Linux crontab as the scheduler, but you can also easily use any ETL tool that is used in your project. See the screenshot below for an ODI example:

Figure 7: TCC extracts placed into one ODI package

The file extract_candidate.sh contains a simple call of the TCC extraction:

    [KKanicki@BIApps tcc-15A.2.0.20]$ cat extract_candidate.sh
    #!/bin/bash
    cd /u01/tcc_linux/tcc-15A.2.0.20/
    ./TaleoConnectClient.sh extracts_definitions/candidate_cfg.xml
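For the crontab option mentioned earlier, a wrapper along the following lines could be used. It is a sketch only: run_extract is a hypothetical function name, and the schedule, script location and log path in the commented crontab entry are assumptions to adapt to your environment.

```shell
#!/bin/sh
# Hypothetical wrapper: run one TCC configuration from the TCC directory.
#   $1 - TCC installation directory
#   $2 - configuration file, relative to that directory
# The subshell keeps the caller's working directory unchanged, and '&&' makes
# sure TCC is not started if the directory cannot be entered.
run_extract() {
    ( cd "$1" && ./TaleoConnectClient.sh "$2" )
}

# Example crontab entry (all values are assumptions): run the candidate
# extract script every day at 02:00 and append its output to a log file.
# 0 2 * * * /u01/tcc_linux/tcc-15A.2.0.20/extract_candidate.sh >> /tmp/extract_candidate.log 2>&1
```

Unlike a plain cd followed by the call, this variant returns a non-zero status when the TCC directory is missing, which makes failures easier to spot in a scheduler.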

If your extracts fail or you have any other issues with configuring Taleo Connect Client, feel free to ask us in the comments section below! In the last couple of years we have delivered several highly successful BI projects in the Human Resources / Human Capital space. Don't hesitate to contact us if you would like to receive specific information about these solutions!

Source: https://www.clearpeaks.com/extracting-data-with-taleo/
